Privacy in the Age of AI

You’re not being watched. You’re being priced.

People love saying it.

“I have nothing to hide.”

They say it like they’ve just solved privacy. Like they’ve transcended paranoia and reached some enlightened state of digital zen.

Cool.

That line made sense in a world where surveillance meant a human watching a human.

This is not that world.

No one is reading your messages like a bored detective with a coffee stain on their shirt.

They’re building a model of you the way a casino studies a gambler.

Not to judge you.

To price you.

And AI makes that pricing cheap enough to do to millions of people at once… quietly… while you’re still telling yourself you’re “just browsing.”

The “nothing to hide” crowd is arguing with a ghost

You think privacy is about secrets.

Cheating.

Crime.

Your weird search history at 2:13 a.m.

That’s adorable. But privacy in 2025 is not about hiding… it’s about leverage.

Who gets to predict your next move.

Who gets to run experiments on your behavior.

Who gets to decide what version of reality you see on your screen.

Because if a company can predict what you’ll do when you’re rushed, stressed, broke, lonely, or desperate… they don’t need to “spy” on you in the movie sense.

They just need to nudge you.

And then charge you accordingly.

Here’s the part nobody wants to say out loud

We’ve entered the age of surveillance pricing.

That’s not me being dramatic. That’s literally what civil liberties folks and consumer watchdogs are calling it.

It’s not the old dynamic pricing you can at least understand.

Not “demand is up, price goes up.”

This is:

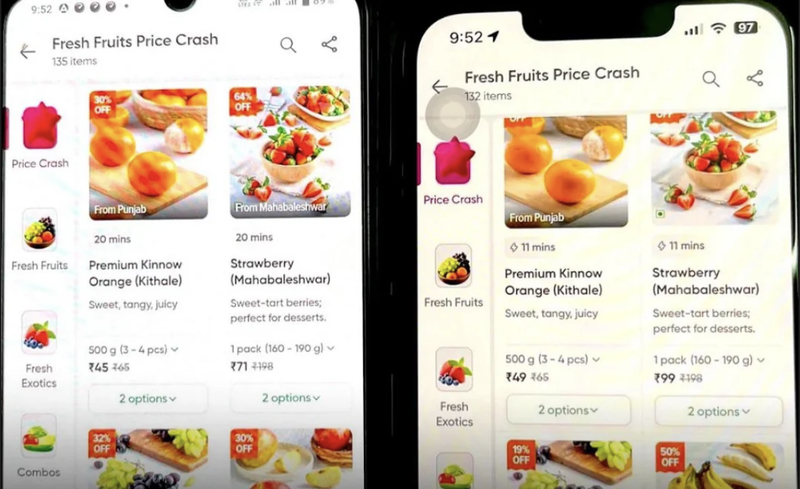

Same product.

Same city.

Same minute.

Different human.

Different price.

And the decision is increasingly made by systems trained on exactly the kind of data you don’t think matters.

Your device.

Your browsing rhythm.

Your purchase history.

Your location.

Your patterns.

Your patience.

Your likelihood of giving up and paying.

That’s the shift.

Privacy wasn’t killed by some supervillain.

It got quietly rebranded as “personalization.”
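If the difference between old dynamic pricing and surveillance pricing still feels abstract, here is a toy sketch of it. Everything in it is an assumption made up for illustration: the `Shopper` fields, the weights, the base price, the function names. It is not any real company's model, just the shape of the idea in a few lines of Python.

```python
from dataclasses import dataclass

BASE_PRICE = 100.0  # hypothetical list price, invented for this sketch


@dataclass
class Shopper:
    # Hypothetical behavioral signals, each scaled from 0.0 to 1.0.
    urgency: float
    patience: float
    price_sensitivity: float


def dynamic_price(demand_ratio: float) -> float:
    """Old-style dynamic pricing: one price for everyone, driven by demand."""
    return round(BASE_PRICE * demand_ratio, 2)


def surveillance_price(demand_ratio: float, shopper: Shopper) -> float:
    """Individualized pricing: the market price, then adjusted per person."""
    # Made-up weights: rushed shoppers pay more,
    # patient or price-sensitive shoppers pay less.
    markup = (
        0.20 * shopper.urgency
        - 0.10 * shopper.patience
        - 0.10 * shopper.price_sensitivity
    )
    return round(dynamic_price(demand_ratio) * (1 + markup), 2)


# Same product, same moment, same demand. Two different humans.
rushed = Shopper(urgency=1.0, patience=0.0, price_sensitivity=0.0)
bargain_hunter = Shopper(urgency=0.0, patience=1.0, price_sensitivity=1.0)

print("everyone:", dynamic_price(1.0))
print("rushed:", surveillance_price(1.0, rushed))
print("bargain hunter:", surveillance_price(1.0, bargain_hunter))
```

The first function never looks at who is buying. The second one does, and that single extra argument is the whole story: the rushed shopper gets quoted above the shared price and the bargain hunter below it, for the same item at the same moment.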

Real-world evidence: it’s not a theory anymore

Let’s put some receipts on the table.

Consumer Reports documented cases where online grocery prices differed between users for identical items, raising serious concerns about individualized pricing and opaque experiments.

And Reuters reported the U.S. Federal Trade Commission is investigating Instacart’s AI pricing tool. That’s not “Twitter paranoia.” That’s federal scrutiny.

This matters because groceries aren’t luxury goods.

They’re baseline survival.

If pricing is personalized there, it’s not “innovation.” It’s a new way to tax the most predictable parts of your life.

Meanwhile, airlines have been openly flirting with AI-driven pricing tools, triggering backlash and scrutiny over whether these systems enable unfair individualized fares.

So no, this isn’t a dystopian thought experiment.

This is Tuesday.

“But it’s convenient” is how the trap works

Every extraction system comes with a gift.

Faster checkout.

Smarter recommendations.

Auto-filled forms.

“Just works.”

Convenience is the bribe.

The cost is that you become legible.

And once you’re legible, you’re predictable.

And once you’re predictable, you’re monetizable.

That’s the entire pipeline.

And AI is the turbocharger.

The PR translation you didn’t ask for

When a company says:

“Personalization.”

They often mean:

“We will quietly reshape your options until you do what’s profitable.”

When they say:

“Improving your experience.”

They often mean:

“We’re running A/B tests on your behavior and calling it product development.”

When they say:

“We respect your privacy.”

They often mean:

“We respect it the way a vacuum cleaner respects dust.”

The AI boom has a hunger problem

Here’s where it gets spicy.

AI doesn’t run on magic.

It runs on data.

And the clean, high-quality public internet data that fueled the first wave of models is not infinite.

It’s being paywalled.

It’s being litigated.

It’s being polluted by AI-generated junk.

So companies need fresh human input… not just static text, but living context.

Your corrections.

Your preferences.

Your local language.

Your culture.

Your daily decisions.

That’s why “free” AI shows up everywhere like a friendly puppy.

It’s not charity.

It’s a supply chain.

India isn’t the user base. It’s the dataset

India is a high-volume, high-diversity, high-velocity environment.

That makes it priceless to anyone trying to build global models.

Not because Indians are “cheap labor” in the old sense.

Because behavior at scale is the new oilfield.

Every prompt you type.

Every rewrite.

Every correction.

Every time you pick “more formal” or “more casual.”

That’s feedback.

That’s training signal.

That’s alignment data.

And it compounds.

So when someone says “I’m using AI for free,” the honest answer is:

No.

You’re paying.

Just not with money.

A necessary correction: not every company is evil

Let’s be careful here.

This isn’t a conspiracy meeting where CEOs sit around a candlelit table plotting how to ruin your day.

It’s something worse.

It’s incentives.

Markets reward companies that extract more per person.

Data makes extraction cheaper.

AI makes it scalable.

And the user… is the only one asked to trust blindly.

So no, it’s not “evil.”

It’s efficient.

Which is often more dangerous.

The actual definition of privacy (the one that matters)

Privacy is not secrecy.

Privacy is not shame.

Privacy is not “I have nothing to hide.”

Privacy is symmetry.

It’s you being able to understand:

What’s collected.

What’s inferred.

What’s sold.

What’s used to decide what you pay.

And being able to say no without being punished with worse service.

Anything else is just surveillance with a user-friendly interface.

The endgame

AI didn’t invent capitalism.

It just made it sharper.

Now the market doesn’t just respond to demand.

It predicts demand.

It shapes demand.

It prices your emotions.

And then it calls that “innovation.”

So sure.

Keep saying “I have nothing to hide.”

Just understand what you’re really saying:

“I’m okay being modeled.”

“I’m okay being nudged.”

“I’m okay paying a different price than the person next to me.”

Because the system isn’t trying to catch you doing something wrong.

It’s trying to find the exact point where you stop resisting.